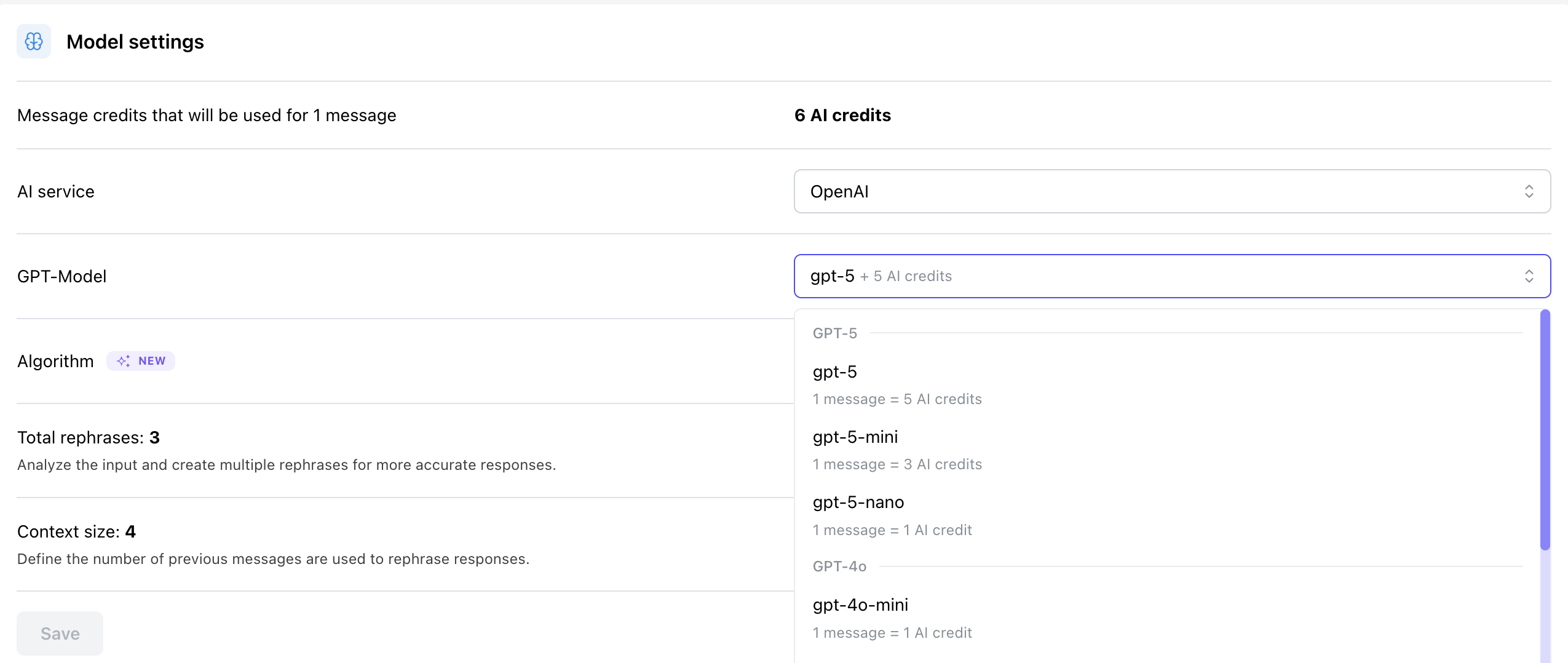

Choosing your LLM model, context window and algorithm

Chathive lets you change the LLM model, the context window, and the algorithm that determines how the model is used. This guide helps you decide which configuration is best for your use case.

Default configuration

When you create a project in Chathive, it starts with these settings:

| Setting | Default value |
|---|---|
| Model | GPT-4o Mini |
| Context size | 8k (growth tier and higher) or 4k (free trial or basic tier) |
| Algorithm | Default |

Although these are usually good defaults, you can greatly improve performance by choosing these settings carefully based on your goals.

How to choose an LLM model

Every LLM model has its strengths and weaknesses, so any choice involves a trade-off. Base your choice on these five factors:

Accuracy: Models with more parameters and a larger training dataset (like GPT-5) tend to be considerably more accurate than smaller, less complex models (like GPT-5 Mini or GPT-5 Nano). If accuracy and reasoning are important, choose a more complex model.

Speed of generating responses: More complex models tend to require more compute and are therefore slower at generating text. For example, GPT-5 Nano is quicker than GPT-5.

Steerability: More complex models generally follow instructions better than less complex ones. If strict adherence to instructions is important, choose a more steerable model. Although more accurate models are typically more steerable, this is not always the case.

Tool usage: Some models excel at using tools, with more complex and newer models generally performing better.

Price: More complex models tend to be more expensive to run and therefore require more message credits.

Model comparison

To help you decide, the table below compares all available models.

| Model Group | Model | Credits | 🎯 Accuracy | 🧭 Steerability | 🛠 Tool Usage | ⚡ Speed | Reasoning |
|---|---|---|---|---|---|---|---|
| GPT-5 | GPT-5 | 5 | 🎯🎯🎯🎯🎯 5 | 🧭🧭🧭🧭🧭 5 | 🛠🛠🛠🛠🛠 5 | ⚡⚡⚡ 3 | ✓ (minimal by default) |
| GPT-5 | GPT-5 Mini | 3 | 🎯🎯🎯🎯 4 | 🧭🧭🧭🧭 4 | 🛠🛠🛠🛠 4 | ⚡⚡⚡⚡ 4 | ✓ (minimal by default) |
| GPT-5 | GPT-5 Nano | 1 | 🎯🎯🎯 3 | 🧭🧭🧭 3 | 🛠🛠🛠🛠 4 | ⚡⚡⚡⚡⚡ 5 | ✓ (minimal by default) |
| GPT-4o | GPT-4o | 5 | 🎯🎯🎯🎯 4 | 🧭🧭🧭 3 | 🛠🛠🛠 3 | ⚡⚡ 2 | ✗ |
| GPT-4o | GPT-4o Mini | 1 | 🎯🎯 2 | 🧭🧭 2 | 🛠🛠 2 | ⚡⚡⚡⚡ 4 | ✗ |
| GPT-4.1 | GPT-4.1 | 4 | 🎯🎯🎯 3 | 🧭🧭🧭🧭 4 | 🛠🛠🛠🛠 4 | ⚡⚡ 2 | ✗ |
| GPT-4.1 | GPT-4.1 Mini | 3 | 🎯🎯🎯 3 | 🧭🧭🧭 3 | 🛠🛠🛠 3 | ⚡⚡⚡ 3 | ✗ |
| GPT-4.1 | GPT-4.1 Nano | 0.5 | 🎯🎯 2 | 🧭🧭 2 | 🛠🛠🛠 3 | ⚡⚡⚡⚡ 4 | ✗ |
| Reasoning | o3 Mini | 12 | 🎯🎯🎯🎯 4 | 🧭🧭 2 | 🛠🛠🛠 3 | ⚡ 1 | ✓ (medium by default) |
| Deprecated | GPT-4 | 60 | 🎯 1 | 🧭 1 | 🛠 1 | ⚡ 1 | ✗ |
| Deprecated | GPT-4 Turbo | 10 | 🎯🎯 2 | 🧭 1 | 🛠🛠 2 | ⚡⚡ 2 | ✗ |

We generally recommend GPT-5 for use cases that require a high level of accuracy. We recommend GPT-5 Nano if you need quick replies, or if you have a very large volume and need to cut costs.
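One way to reason about the trade-off is to weight the ratings from the comparison table by what matters for your project. The sketch below is only an illustration: the `ModelRating` class, the `pick_model` function and the weighting scheme are hypothetical helpers, not part of Chathive. The numbers are copied from the table above, with deprecated models left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelRating:
    name: str
    credits: float  # value of the Credits column in the table
    accuracy: int
    steerability: int
    tool_usage: int
    speed: int


# Ratings copied from the comparison table (deprecated models omitted).
MODELS = [
    ModelRating("GPT-5", 5, 5, 5, 5, 3),
    ModelRating("GPT-5 Mini", 3, 4, 4, 4, 4),
    ModelRating("GPT-5 Nano", 1, 3, 3, 4, 5),
    ModelRating("GPT-4o", 5, 4, 3, 3, 2),
    ModelRating("GPT-4o Mini", 1, 2, 2, 2, 4),
    ModelRating("GPT-4.1", 4, 3, 4, 4, 2),
    ModelRating("GPT-4.1 Mini", 3, 3, 3, 3, 3),
    ModelRating("GPT-4.1 Nano", 0.5, 2, 2, 3, 4),
    ModelRating("o3 Mini", 12, 4, 2, 3, 1),
]

FACTORS = ("accuracy", "steerability", "tool_usage", "speed")


def pick_model(weights, max_credits=None):
    """Return the name of the highest-scoring model within the credit limit.

    `weights` maps a factor name to its importance; missing factors count as 0.
    This weighted sum is an assumed heuristic, not Chathive's own logic.
    """
    candidates = [
        m for m in MODELS if max_credits is None or m.credits <= max_credits
    ]
    if not candidates:
        raise ValueError("no model fits the credit limit")

    def score(model):
        return sum(weights.get(f, 0) * getattr(model, f) for f in FACTORS)

    return max(candidates, key=score).name


print(pick_model({"accuracy": 1.0}))                # GPT-5
print(pick_model({"speed": 1.0}, max_credits=1))    # GPT-5 Nano
```

Both results line up with the recommendation above: GPT-5 when accuracy dominates, GPT-5 Nano when speed and cost dominate.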

Choosing your context window

Only Chathive team members can change this setting; contact support to request a change.

Chathive lets you choose how large a context window to use for each message. A larger context window fits more text from your sources into each message. It also allows longer questions to, and longer replies from, the AI assistant.

Larger context window for higher accuracy

We mainly recommend increasing the context window to improve the accuracy of your AI assistant. With a larger context window, we can pass larger snippets of text from your sources to the model for each message. The model then has more input to work with, which tends to improve accuracy.

A larger context window improves accuracy in these ways:

More complete snippets from your sources: Smaller context windows only allow us to include small snippets of your training data as sources. Larger context windows allow us to include larger snippets, thus improving the chance that the full needed context is there for creating the answer.

Using more sources: Smaller context windows also limit the number of sources we can include, which increases the chance that the right sources are left out.

Long questions taking up space for sources: The longer the user's question, the less space we have for sources. If your users ask long questions, performance drops sharply at smaller context sizes.

More conversation history: To fit as many sources as we can, we cut the conversation history short if we need that space for the sources. This results in the model knowing less of the past conversation when answering the question. Increasing context size will let the AI assistant retain more memory of the conversation.

Longer responses: Complex cases sometimes need a long explanation. More context allows the AI assistant to create longer responses.
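The effects listed above can be made concrete with a small budgeting sketch. It is not Chathive's actual implementation: the function name `allocate_context`, the fixed reply reserve and every token count are assumptions chosen for illustration, and it treats 4k and 8k as roughly 4,000 and 8,000 tokens. It only mirrors the rule described above that sources take priority and conversation history gets whatever space is left.

```python
def allocate_context(window, question_tokens, history_tokens,
                     source_sizes, reply_reserve=500):
    """Split a context window between question, reply, sources and history.

    `source_sizes` lists the token size of each candidate snippet, assumed
    to be ordered from most to least relevant. All values are hypothetical.
    """
    remaining = window - question_tokens - reply_reserve
    if remaining < 0:
        raise ValueError("question and reply reserve exceed the window")

    included = []
    for size in source_sizes:
        if size > remaining:
            break  # the next snippet no longer fits
        included.append(size)
        remaining -= size

    # History only gets the space that sources did not use.
    history_kept = min(history_tokens, remaining)
    return {
        "sources_included": len(included),
        "source_tokens": sum(included),
        "history_tokens": history_kept,
    }


snippets = [1000] * 6  # six candidate snippets of 1,000 tokens each

# Short question in a 4k window: 3 sources fit, 300 tokens of history remain.
print(allocate_context(4000, 200, 1500, snippets))

# Same question in an 8k window: all 6 sources fit, 1,300 tokens of history.
print(allocate_context(8000, 200, 1500, snippets))

# Long question in a 4k window: only 1 source fits.
print(allocate_context(4000, 2000, 1500, snippets))
```

With these made-up numbers, doubling the window doubles the number of sources and keeps more than four times as much history, while a long question in the small window leaves room for a single source.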

Changing context size does have pricing implications!

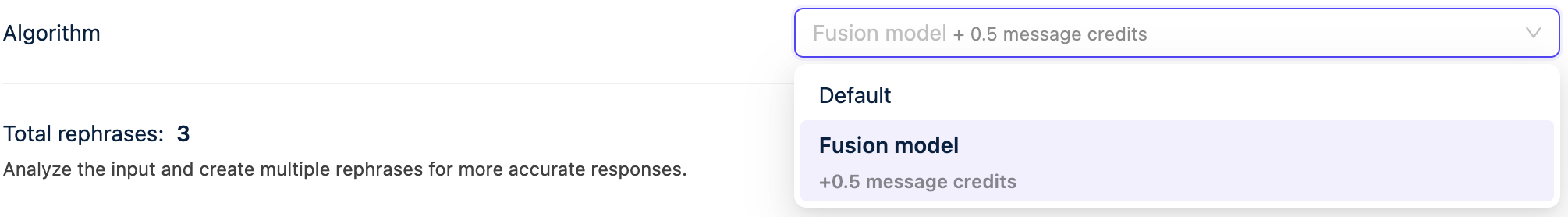

Choosing your algorithm

The last step is to choose an algorithm. An algorithm determines how the model and context window are used to find sources and generate responses.

Currently, we offer 2 distinct algorithms:

Default: Use the question to find sources and feed those into the model.

Fusion algorithm (recommended): Rephrases the question a few times and uses all those rephrasings to search the database. This algorithm greatly improves the search results if the user uses different words or phrasings than are used in your AI database. It slightly increases the time it takes for the AI to start responding, as it first has to rephrase and only then can start responding.
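The difference between the two algorithms can be sketched with a toy search. Everything here is an assumption for illustration: `keyword_search` stands in for the real database search, the rephrasings are hard-coded where a model would normally generate them, and the results are merged with reciprocal rank fusion, a common technique that this guide does not confirm Chathive uses.

```python
from collections import defaultdict


def keyword_search(query, documents, top_k=3):
    """Toy search: rank documents by the number of words shared with the query."""
    query_words = set(query.lower().split())
    scored = []
    for doc_id, text in documents.items():
        overlap = len(query_words & set(text.lower().split()))
        if overlap:
            scored.append((overlap, doc_id))
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for _, doc_id in scored[:top_k]]


def fusion_search(queries, documents, top_k=3, k=60):
    """Search with every phrasing, then merge the rankings.

    Uses reciprocal rank fusion: each document scores 1 / (k + rank)
    for every result list it appears in (assumed merge strategy).
    """
    fused = defaultdict(float)
    for query in queries:
        results = keyword_search(query, documents, top_k)
        for rank, doc_id in enumerate(results, start=1):
            fused[doc_id] += 1.0 / (k + rank)
    ranked = sorted(fused, key=lambda doc_id: (-fused[doc_id], doc_id))
    return ranked[:top_k]


DOCS = {
    "refunds": "how to get your money back after a purchase",
    "shipping": "delivery times and shipping costs",
    "billing": "update your invoice and payment details",
}

question = "can I return my order"
# Hypothetical rephrasings; in practice a model would generate these.
rephrasings = [question, "how do I get my money back", "refund after purchase"]

print(keyword_search(question, DOCS))    # [] (no shared words)
print(fusion_search(rephrasings, DOCS))  # ['refunds']
```

The original question shares no words with any document, so the single search finds nothing, while the rephrasings surface the refunds document. The extra rephrasing step is also where the added response delay comes from.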

The pricing implications are also described in our message credits documentation.